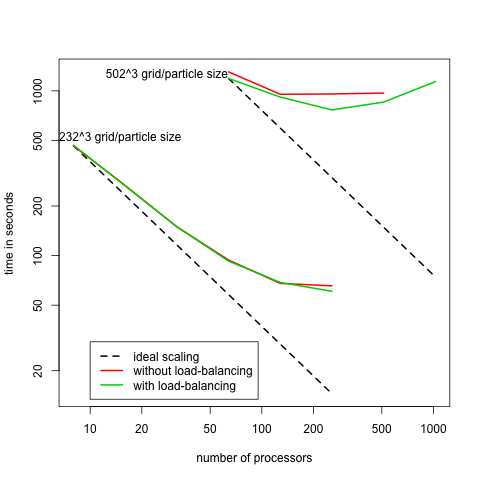

| | This page shows performance results for ENZO from the svn repository. We did this in part to see the effect of load balancing (not enabled in version 1.5) on scaling performance. The previous performance results for ENZO version 1 are [[EnzoV1Performance | here]]. |


| − |

| |

| − | This is a short overview of performance results from the ENZO application. For each experiment we used these inits/param files:

| |

| − |

| |

| − | * [http://giusto.nic.uoregon.edu/~scottb/SingleGrid_dmonly.inits inits]

| |

| − | * [http://giusto.nic.uoregon.edu/~scottb/SingleGrid_dmonly_amr.param param]

| |

| − |

| |

| − | This is a relatively small experiment, but it was sufficient to generate some interesting performance results. For this study we used the [http://tau.uoregon.edu TAU Performance System®] to gather information about ENZO's performance; in particular, we are interested in the performance of the AMR simulation at scale. We ran these experiments on NCSA's Intel 64 Linux Cluster (Abe).

| |

| − |

| |

| − | ==TAU Measurement overhead==

| |

| − | Here is a short table listing the run-times for various experiments and the instrumentation overhead observed. Each run was on 64 processors (8 nodes).

| |

| − |

| |

| − | {|

| |

| − | |-

| |

| − | ! Run Type

| |

| − | ! Runtime (seconds)

| |

| − | ! Overhead %

| |

| − | |-

| |

| − | |Uninstrumented runtime

| |

| − | |1072

| |

| − | |NA

| |

| − | |-

| |

| − | |Trace of only MPI events

| |

| − | |1085

| |

| − | |4.8%

| |

| − | |-

| |

| − | |Profile of all significant events

| |

| − | |1136

| |

| − | |6.0%

| |

| − | |-

| |

| − | |Profile with Call-path information

| |

| − | |1196

| |

| − | |11.6%

| |

| − | |-

| |

| − | |Profile of each Phase of execution

| |

| − | |1208

| |

| − | |12.7%

| |

| − | |}
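
The page does not state how the overhead column was derived. A minimal Python sketch, assuming overhead is the extra runtime relative to the uninstrumented run, is shown here; the function name `overhead_percent` is hypothetical. Under that assumption the runtimes above reproduce the listed 6.0%, 11.6% and 12.7%, while the MPI-only trace comes out at about 1.2% rather than the listed 4.8%, so one of those two table entries may be mistyped.

```python
# Runtimes in seconds, copied from the table above (64 processors).
BASELINE = 1072  # uninstrumented run

instrumented = {
    "Trace of only MPI events": 1085,
    "Profile of all significant events": 1136,
    "Profile with call-path information": 1196,
    "Profile of each phase of execution": 1208,
}


def overhead_percent(runtime, baseline=BASELINE):
    """Return extra runtime as a percentage of the baseline runtime.

    Assumed formula: 100 * (instrumented - baseline) / baseline.
    """
    return 100.0 * (runtime - baseline) / baseline


# Round to one decimal place, matching the precision used in the table.
overheads = {name: round(overhead_percent(t), 1) for name, t in instrumented.items()}

for name, pct in overheads.items():
    print(f"{name}: {pct}%")
```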

| |

| − |

| |

| − | ==Runtime Breakdown on 64 processors==

| |

| − |

| |

| − | Here is a chart showing the contribution each function makes to the overall runtime. Notice that MPI communication time takes over 60% of the total runtime.

| |

| − |

| |

| − | [[Image:MeanFunctionLinux.png]]

| |

| − |

| |

| − | ==Experiment Scalability==

| |

| − |

| |

| − | These charts show the relative efficiency for grid sizes of 128^3 and 256^3. Relative efficiency measures how much slower a run of the application is than the ideal. In this case, ideal efficiency means that doubling the processor count cuts the runtime in half.

| |

| − |

| |

| − | [[image:Scaling128.png]] [[image:Scaling256.png]]
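
As a rough illustration of relative efficiency, the Python sketch below compares each run against a baseline run scaled ideally. The function name and the runtimes are hypothetical and are not taken from the ENZO measurements; the exact formula behind the charts is not given on this page, so this is one common way to compute it.

```python
def relative_efficiency(base_procs, base_time, procs, time):
    """Efficiency of a run relative to a baseline run.

    A value of 1.0 means ideal scaling: the runtime shrinks in proportion
    to the number of processors added. Values below 1.0 mean the run is
    slower than the ideal.
    """
    ideal_time = base_time * base_procs / procs
    return ideal_time / time


# Hypothetical runtimes in seconds, keyed by processor count.
# Illustration only; these are not measured ENZO data.
runs = {16: 400.0, 32: 200.0, 64: 125.0}
base_procs = 16

efficiencies = {
    procs: relative_efficiency(base_procs, runs[base_procs], procs, seconds)
    for procs, seconds in runs.items()
}

for procs, eff in efficiencies.items():
    print(f"{procs} processors: {eff:.2f}")
```

In this made-up data, going from 16 to 32 processors halves the runtime (efficiency 1.0), while going to 64 processors falls short of the ideal 100 seconds (efficiency 0.8).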

| |

| − |

| |

| − |

| |

| − | This chart shows the breakdown of the runtime across functions for different numbers of processors (128^3 grid size). As in the 64-processor case, MPI communication time continues to dominate the runtime, and to an even greater extent as the number of processors grows.

| |

| − |

| |

| − | [[image:MeanRuntineAtScale2.png]]

| |

| − |

| |

| − | ==Experiment Trace==

| |

| − | This graphic shows how load imbalance causes long wait times in MPI_Allreduce. Some processors experience as much as 8 seconds of wait time per reduce.

| |

| − |

| |

| − | [[Image:trace.png|1000px]]
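
The link between load imbalance and wait time in a blocking collective can be sketched with a simple model in Python. It assumes every rank enters the collective as soon as its own compute finishes and that the collective completes only when the slowest rank arrives; communication cost is ignored. The function name and the per-rank times are hypothetical, not measured ENZO data.

```python
def allreduce_wait_times(compute_times):
    """Estimate per-rank wait time at a blocking collective.

    Simplified model: each rank waits until the slowest rank arrives,
    so its wait is the gap between its own compute time and the maximum.
    """
    slowest = max(compute_times)
    return [slowest - t for t in compute_times]


# Hypothetical per-rank compute times in seconds (illustration only).
compute_times = [2.0, 2.5, 10.0, 3.0]

waits = allreduce_wait_times(compute_times)

for rank, wait in enumerate(waits):
    print(f"rank {rank}: waits {wait:.1f} s")
```

In this model only the slowest rank waits zero seconds; every other rank idles in the collective, which is the pattern the trace above suggests.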

| |

| − |

| |

| − | ==Experiment Call-Paths==

| |

| − | We observe the following relationships in the experiment call-path:

| |

| − |

| |

| − | * Almost all the time spent in MPI_Bcast is when it is called from MPI_Allreduce.

| |

| − | * Almost all the time spent in MPI_Recv is when it is called from grid::CommunicationSendRegion.

| |

| − | * Nearly all the time spent in MPI_Allgather is when it is called from CommunicationShareGrids.

| |

| − | * Almost all the time spent in MPI_Allreduce is when it is called from CommunicationMinValue.

| |

| − |

| |

| − | This chart shows the details:

| |

| − |

| |

| − | [[Image:CallpathRuntime3.png]]
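
Statements of the form "almost all the time in X is spent when called from Y" can be derived from call-path data by grouping each callee's time by its immediate caller. The Python sketch below assumes the data is available as a mapping from `caller => callee` strings to seconds; that key format, the function name `parent_shares`, the caller `OtherCaller` and all the numbers are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def parent_shares(callpath_times):
    """Map each callee to {immediate caller: fraction of the callee's time}."""
    totals = defaultdict(float)
    by_parent = defaultdict(lambda: defaultdict(float))
    for path, seconds in callpath_times.items():
        # Split off the last element as the callee; the element just
        # before it is the immediate caller.
        prefix, child = path.rsplit("=>", 1)
        parent = prefix.split("=>")[-1].strip()
        child = child.strip()
        totals[child] += seconds
        by_parent[child][parent] += seconds
    return {
        child: {p: t / totals[child] for p, t in parents.items()}
        for child, parents in by_parent.items()
    }


# Hypothetical call-path times in seconds (not measured ENZO data).
callpath_times = {
    "CommunicationMinValue => MPI_Allreduce": 95.0,
    "OtherCaller => MPI_Allreduce": 5.0,
    "grid::CommunicationSendRegion => MPI_Recv": 40.0,
}

shares = parent_shares(callpath_times)

for callee, parents in shares.items():
    for caller, fraction in parents.items():
        print(f"{callee} called from {caller}: {fraction:.0%}")
```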

| |

| − |

| |

| − | ==Experiment Phases==

| |

| − | We also looked at ENZO's runtime across each iteration of the main loop in EvolveHierarchy. Here is the CommunicationShareGrids function, representing the work done during consecutive loop iterations (time is in microseconds):

| |

| − |

| |

| − | [[Image:CommunicationShareGrids2.png]]

| |

| − |

| |

| − | Notice that some iterations write out the grid (a lot of time is spent in WriteDataHierarchy).

| |

| − |

| |

| − | Here is a breakdown, by function, of the time spent over the course of the experiment. The Y-axis is the exclusive time spent in each function, and the X-axis is the overall elapsed runtime:

| |

| − |

| |

| − | [[Image:snapshot.png|1000px]]

| |
